16 Dec 14 Technical SEO Takeaways from TechSEOBoost via @navahf

TechSEOBoost is a tech SEO’s paradise: incredibly technical and actionable sessions inspiring innovative approaches and empowering solutions.

What was a PPC person doing there?!

Usually, if there’s a PPC track, the PPC folks will go there. If there’s an SEO track, the SEO folks will go there.

For too long, the silos between SEO and PPC have blocked empathy and knowledge sharing between the two disciplines.

This PPC marketer was curious to understand what pain points and innovations our counterparts were exploring.

One of the best parts of the conference was seeing how many parallels there are in how SEO and PPC are evolving.

If there’s one focus point we can all agree on, it’s audiences and understanding their shifting desires.

The other takeaway weaving its way through most talks was to share data and make marketing a truly cross-department initiative.

Each speaker had great takeaways – here’s the round-up of the main takeaway from each presentation:

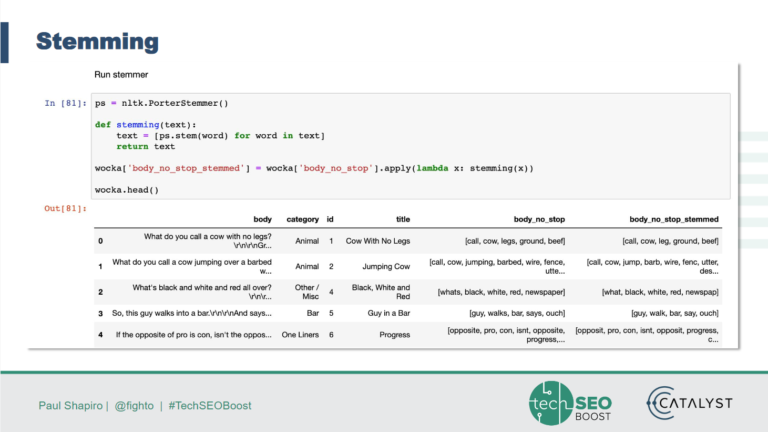

1. NLP for SEO

Pragmatism is a beautiful trait, and Paul Shapiro led a great discussion on how to decide which parsing method would serve you best.

In the spirit of pragmatism, Python was a requirement for this talk.

While stemming is faster, it can be messy and leave partial words (for example, a Porter stemmer reduces “studies” to “studi”).

Lemmatization is more accurate, but it takes more time.

When deciding how you’ll parse, consider the scope of the content and whether the intent could be lost by taking the faster stemming route.
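To make the trade-off concrete, here is a toy comparison in plain Python. It is not Shapiro’s code and not a real NLP library: `crude_stem` strips suffixes by rule (fast, but it can leave fragments), while `lookup_lemma` maps words to dictionary forms using a tiny, hypothetical lexicon. Real projects would typically reach for a library such as NLTK or spaCy instead.

```python
# Toy illustration only: a rule-based suffix stripper vs. a lookup-based lemmatizer.
SUFFIXES = ("ing", "ies", "es", "ed", "s")  # checked in this order


def crude_stem(word: str) -> str:
    """Strip the first matching suffix: fast, but may leave partial words."""
    w = word.lower()
    for suffix in SUFFIXES:
        # Keep at least three characters so short words are not destroyed.
        if w.endswith(suffix) and len(w) - len(suffix) >= 3:
            return w[: -len(suffix)]
    return w


# Hypothetical mini-lexicon; a real lemmatizer uses a full dictionary plus part-of-speech tags.
LEMMAS = {"studies": "study", "running": "run", "better": "good", "mice": "mouse"}


def lookup_lemma(word: str) -> str:
    """Return the dictionary form if known, otherwise the lowercased word."""
    w = word.lower()
    return LEMMAS.get(w, w)


if __name__ == "__main__":
    for word in ["studies", "running", "mice"]:
        print(word, crude_stem(word), lookup_lemma(word))
```

The stemmer never needs a dictionary, which is why it is faster; the cost is output like “stud” and “runn”, which is exactly where intent can get lost.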

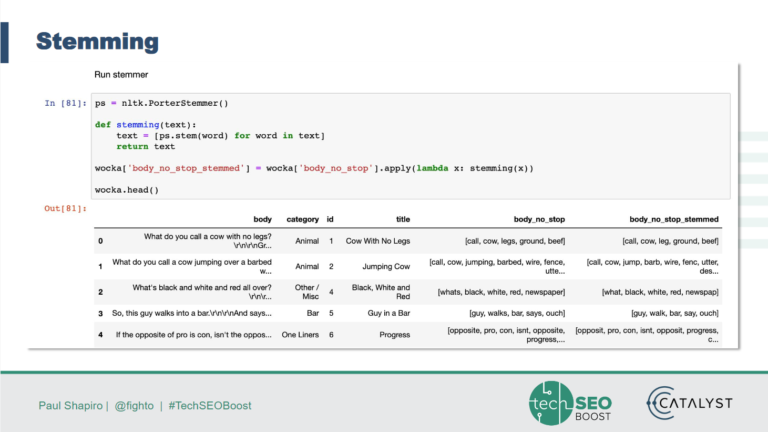

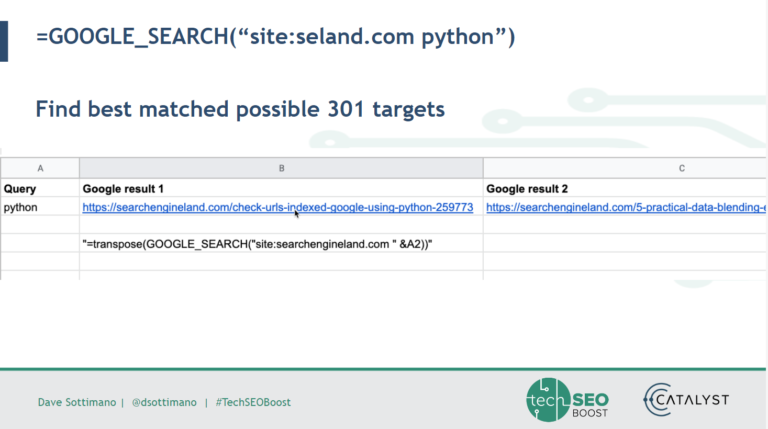

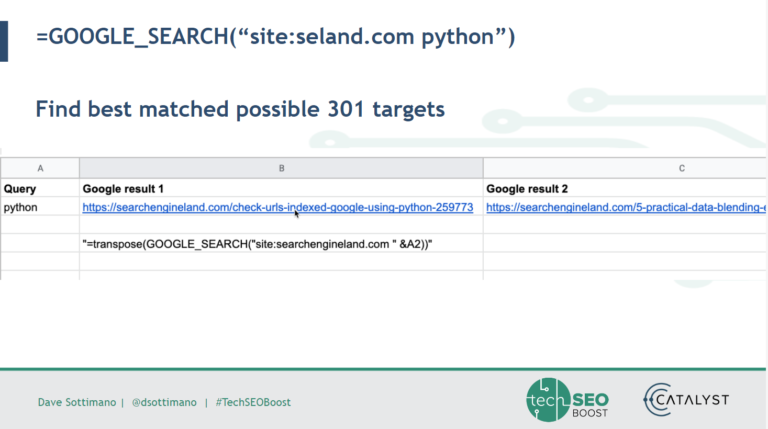

2. Automate, Create & Test with Google Apps Script

This session elicited audible excitement for good reason: David Sottimano gave us easy hacks to analyze 10 BILLION rows of data without SQL!

The “secret” is the Sheets data connector, and the implications are exactly as exciting as they sound!

Sottimano outlined the following use cases:

- Cleaning and manipulating data quickly in Sheets.

- Parsing URLs quickly.

- Scraping Google via SerpApi.

- Creating your own auto-suggest.
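The talk did this inside Sheets; as an illustration outside Sheets, here is the URL-parsing idea with Python’s standard `urllib.parse`: split each URL into host, path, query parameters, and fragment so pages can be grouped or filtered. `parse_url` is a hypothetical helper name, and this sketch keeps only the first value of any repeated query key.

```python
from urllib.parse import parse_qs, urlsplit


def parse_url(url: str) -> dict:
    """Split a URL into the components you would otherwise parse with formulas."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "host": parts.netloc,
        "path": parts.path,
        # parse_qs returns lists of values; keep the first value per key.
        "query": {key: values[0] for key, values in parse_qs(parts.query).items()},
        "fragment": parts.fragment,
    }


print(parse_url("https://www.example.com/shoes/red?size=9&color=red#reviews"))
```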

For everyday SEO, these practical use cases were suggested:

Checking for indexing and 301 targets in the same action:

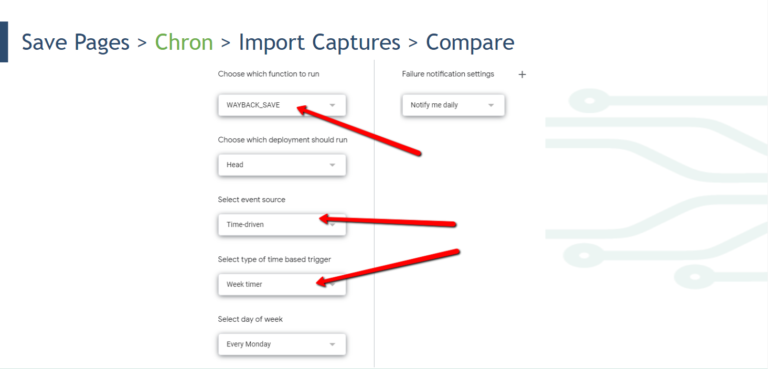

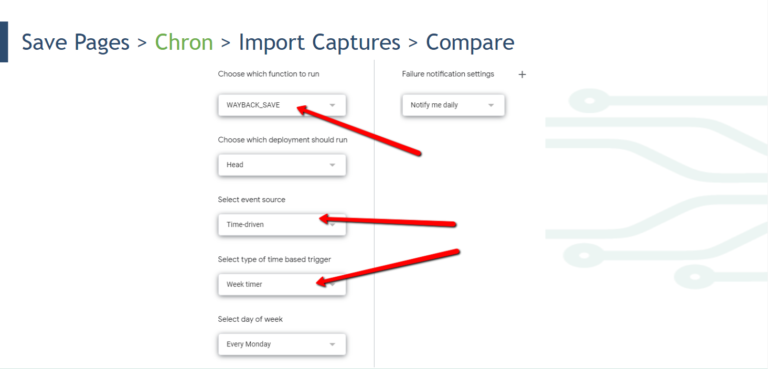
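Confirming that Google has actually indexed a URL needs a search-side check (such as the SerpApi scraping listed earlier), so this Python sketch covers only the on-page half: given a response you have already fetched, it reports where a 301 (or any 3xx) points and whether the page carries `noindex` in a meta robots tag or the `X-Robots-Tag` header. The class and function names are hypothetical; this is not Sottimano’s Apps Script code.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            self.directives.append(attributes.get("content", "").lower())


def audit_response(status: int, headers: dict, html: str) -> dict:
    """Report the redirect target and noindex signals for one already-fetched response."""
    lowered = {name.lower(): value for name, value in headers.items()}
    parser = RobotsMetaParser()
    parser.feed(html)
    # Combine meta robots directives with the X-Robots-Tag HTTP header.
    signals = " ".join(parser.directives + [lowered.get("x-robots-tag", "").lower()])
    return {
        "status": status,
        "redirect_target": lowered.get("location") if 300 <= status < 400 else None,
        "noindex": "noindex" in signals,
    }
```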

Monitoring pages, comparing content and caching:

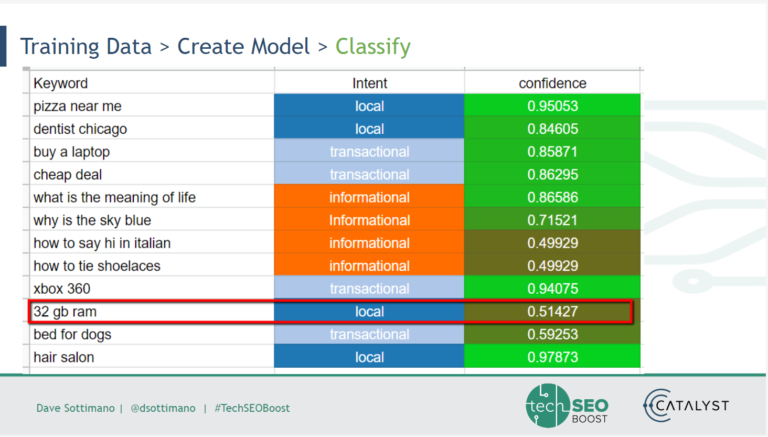

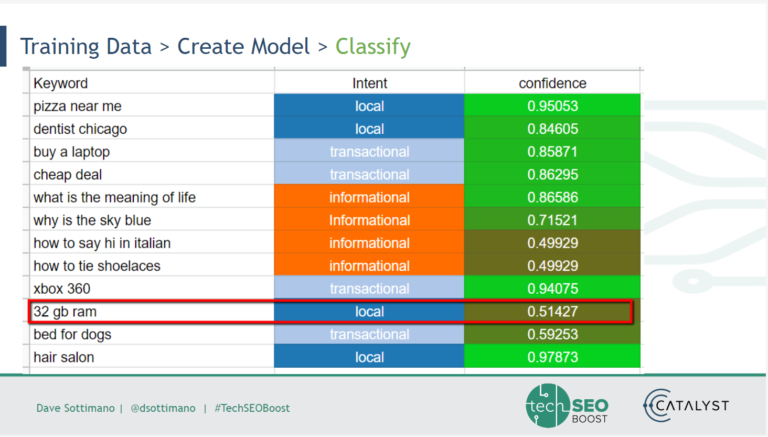
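One way to sketch the monitoring idea, assuming you already store a cached copy of each page’s text: fingerprint both versions with a hash and only build a diff when the fingerprints differ. This uses Python’s standard `hashlib` and `difflib` rather than the Sheets approach from the talk, and `compare_snapshots` is a hypothetical name.

```python
import difflib
import hashlib


def fingerprint(text: str) -> str:
    """Stable hash of page text, cheap to store alongside the cached copy."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def compare_snapshots(cached: str, live: str) -> dict:
    """Flag a change and, only if there is one, return a line-level unified diff."""
    changed = fingerprint(cached) != fingerprint(live)
    diff = []
    if changed:
        diff = list(
            difflib.unified_diff(
                cached.splitlines(), live.splitlines(), "cached", "live", lineterm=""
            )
        )
    return {"changed": changed, "diff": diff}
```

Comparing hashes first keeps the routine check cheap; the more expensive diff only runs on pages that actually changed.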

Machine learning classification using BigML and SEMrush keyword data.
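BigML is a hosted service, so as a stand-in, here is a minimal multinomial Naive Bayes keyword-intent classifier in plain Python. The technique is swapped in for illustration (it is not necessarily what BigML or Sottimano used), and the labeled keywords are hypothetical; in practice they would come from a keyword export, such as SEMrush data, that you label yourself.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled keywords.
EXAMPLES = [
    ("buy running shoes", "transactional"),
    ("cheap shoes sale", "transactional"),
    ("buy laptop deal", "transactional"),
    ("how to clean shoes", "informational"),
    ("what is a laptop", "informational"),
    ("how do running shoes work", "informational"),
]


def train_nb(examples):
    """Count label frequencies and per-label token frequencies."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        tokens = text.lower().split()
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab


def predict_nb(model, text):
    """Pick the label with the highest log prior + log likelihood (Laplace smoothing)."""
    class_counts, word_counts, vocab = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)
        denominator = sum(word_counts[label].values()) + len(vocab)
        for token in text.lower().split():
            # +1 smoothing keeps unseen tokens from zeroing out the probability.
            score += math.log((word_counts[label][token] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train_nb(EXAMPLES)
print(predict_nb(model, "buy shoes"))
```

With six training rows this is only a demonstration of the mechanics; a useful model needs far more labeled keywords.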

3. When You Need Custom SEO Tools

The first panel of TechSEOBoost focused on knowing when third-party tools might not be enough and when it makes sense to invest in proprietary tools.

The panel consisted of:

- Nick Vining: Catalyst (moderator)

- Claudia Higgins: Argos

- Derek Perkins: Nozzle

- Jordan Silton: Apartments.com

- JR Oakes: Locomotive

While the panelists each had their unique perspective to share, the overarching theme they focused on was cross-department empathy and data access.

While investing the time and resources to build a custom solution may seem daunting, the panelists all agreed that having a single source of digestible truth more than pays for it.

Specific soundbites we can all learn from:

Higgins discussed shedding the fear of building a custom solution, or of thinking it’s only possible if you have a really technical background. Don’t let a lack of tech chops get in the way of solving a problem you know needs solving!

Silton gave really good pragmatic advice: never settle on the first solution you come up with. Always challenge yourself to think through at least 2-3 additional solutions so you don’t eat dev resources on a near-miss tool.

Oakes encourages us to use usage as a metric for deciding whether a tool is outdated, and to never build unless there’s a clear understanding of the outcome.

Perkins reminds us to hold off on automating a function/data set until it has come up at least three times. Any fewer than three, and the sample size and data focus will be compromised.

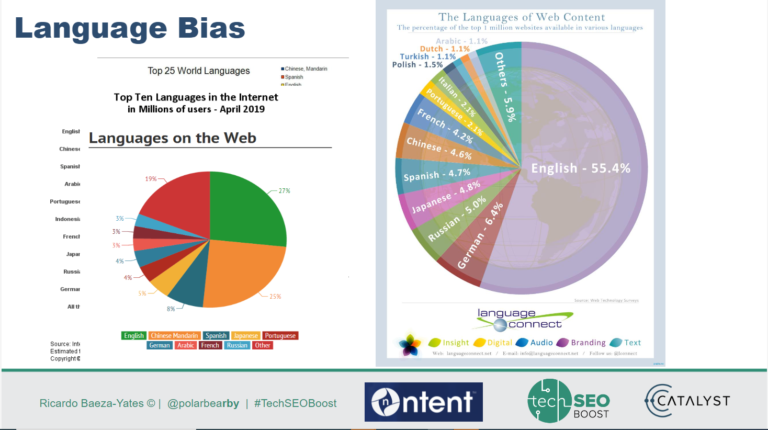

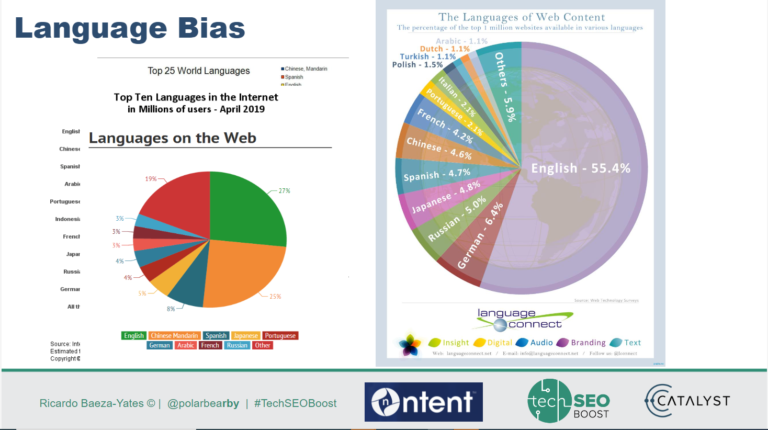

4. Bias in Search & Recommender Systems

To be human is to have bias – and the impact of those biases is felt in our careers, purchases, and work ethic.

Ricardo Baeza-Yates outlined three biases that have far-reaching implications:

- Presentation bias: Whether a product/service/idea is presented and can, therefore, be an eligible choice.

- Cultural bias: The factors that go into work-ethic and perspective.

- Language bias: The number of people who speak the language most content is in.

Presentation bias has the biggest impact on SEO (and PPC). If you’re not presented during the period of consideration, you’re not going to be chosen.

It’s not sustainable to own everyone’s presentation bias, so we must understand which personas represent the most profit.

Once we’re in front of our ideal people, we must know how to reach them.

Enter culture and language bias.

Baeza-Yates frames cultural bias as living on two scales: the minimum effort needed to avoid the maximum shame.

Depending on the market, you’ll need to tailor your messaging to honor higher/lower work ethics.

Language bias is an insidious one – the majority of content is in English, but only 23% of the internet-accessing world speaks English.

This gives an unfair advantage to a select group – and given that online translators can’t always capture true intent, there’s a high risk that content won’t be crawled and indexed properly.

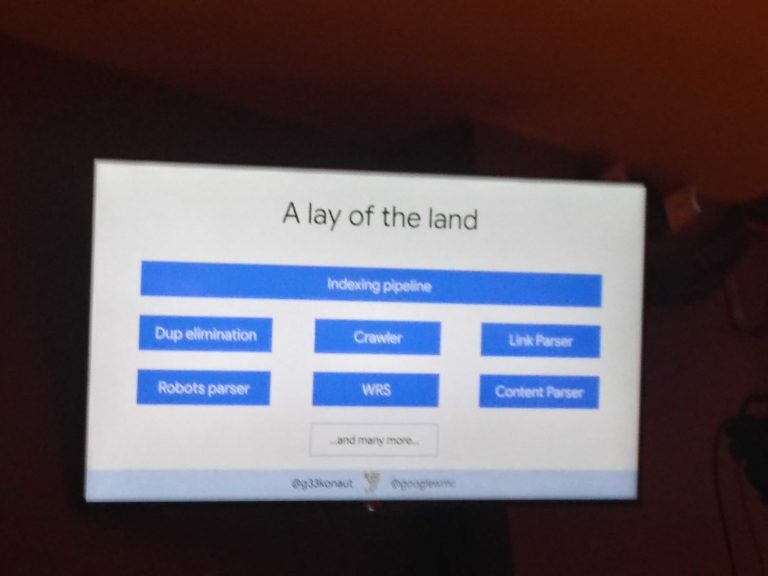

5. Googlebot & JavaScript

Whenever a Googler shares insights, there’s always at least one nugget to take home.

The big takeaways from Google’s Martin Splitt included:

- Google knows where iframes are, and odds are their content is making it into the DOM.

- Avoid layout thrashing – it invites lag time in rendering.

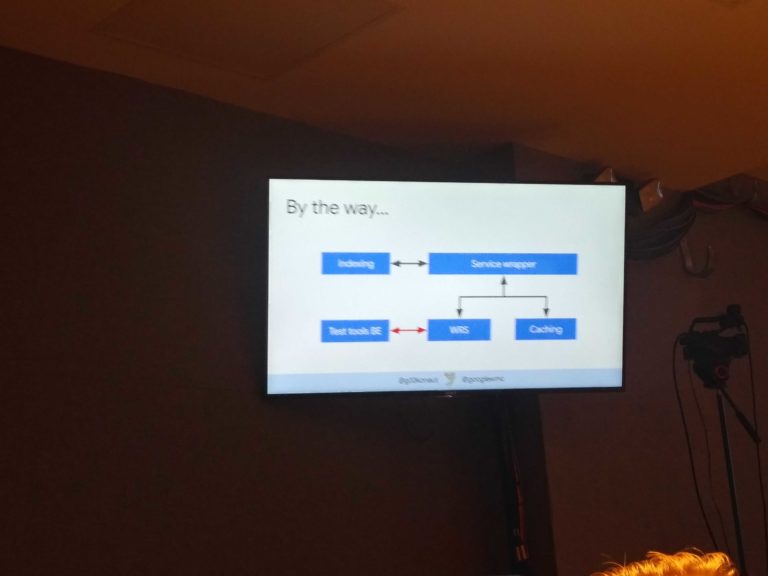

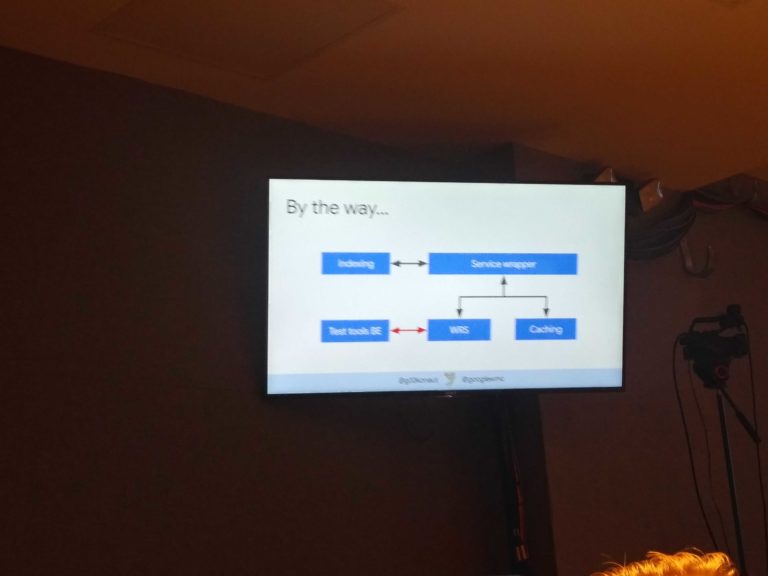

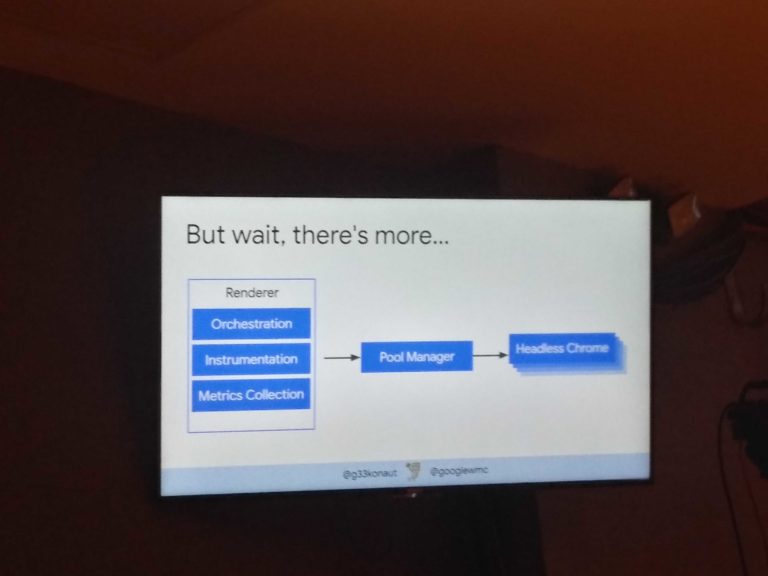

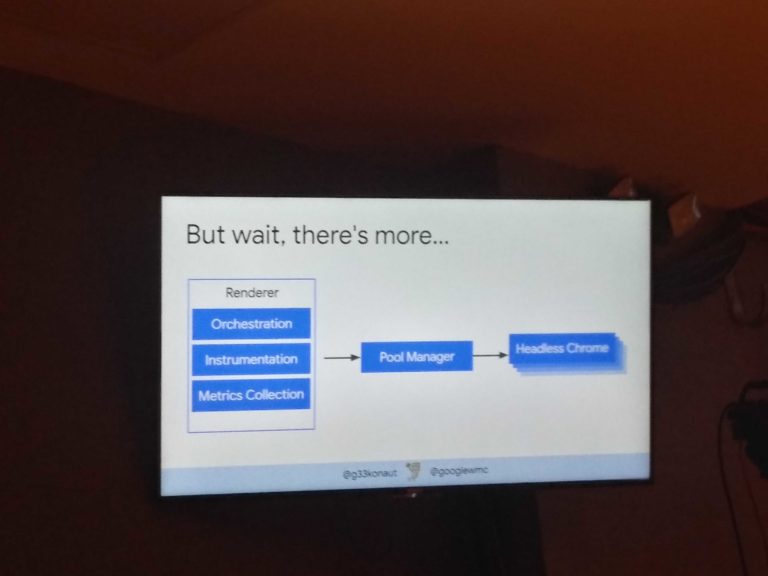

- For WRS (Google’s Web Rendering Service), a page is simply HTML + content/resources: that’s your DOM tree.

- Google doesn’t just rely on an average timeout metric – they balance it with network activity.

- Mobile indexing has tighter timeouts.

- If a page can’t render correctly due to a “Google” problem, they’ll surface an “other” error.

- Consider which side of the devil’s bargain you want to be on: if you bundle your code, you’ll have fewer requests, but any change will require re-uploading the whole bundle.

- Only looking at queue time and render time will lead you down the wrong path – the indexing pipeline could be the issue.

I will admit that, as a PPC marketer, most of this didn’t have the same “shock and awe” for me as it did for the rest of the room. That said, one big takeaway I had was on page layout and its impact on CRO (conversion rate optimization).

The choices we make to optimize for conversions (page layout, content thresholds, contact points, etc.) align more than I would have assumed with the Google SEO perspective.

Either way, the tests needed in both disciplines confirm the value of dedicated PPC pages and the importance of cross-department communication.

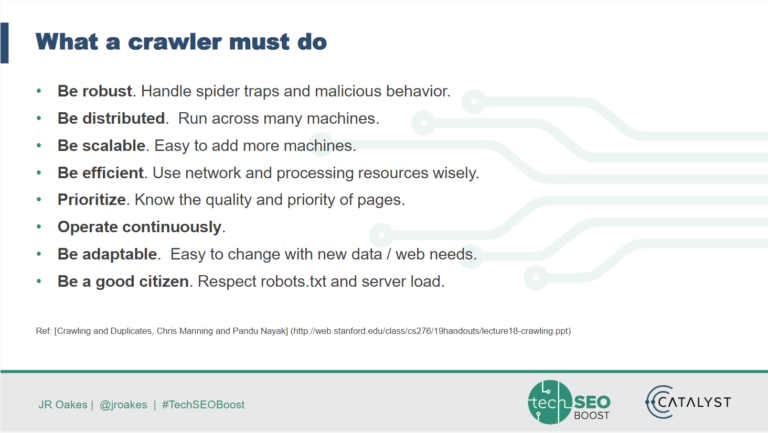

6. What I Learned by Building a Toy to Crawl Like Google

It’s easy to complain and gloat from the sidelines. It takes a brave and clever mind to jump in and take a stab at the thing you may or may not have feelings about.

JR Oakes is equal parts brave, clever, and generous.

You can access his “toy crawler” on GitHub and explore/adapt it.

His talk discussing the journey focused on three core messages:

- If we’re going to build a crawler to understand the mechanics of Google, we need to honor the rules Google sets itself.

- Text NLP is really important, and if honoring BERT mechanics, stop words are necessary (no stemming).

- Understanding when and where to update values is far harder than anticipated, and it created a new level of sympathy/empathy for Google’s pace.
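Honoring the rules starts with robots.txt. Here is a minimal sketch using Python’s standard `urllib.robotparser` to filter a crawl frontier; the robots.txt content and the `toy-crawler` user agent are hypothetical, and this is not Oakes’s code. One caveat: the standard-library parser uses simple prefix matching and applies the first matching rule, whereas Google applies the most specific matching rule and supports `*` and `$` wildcards, so results can differ on complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
"""


def build_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def filter_frontier(parser: RobotFileParser, user_agent: str, urls: list[str]) -> list[str]:
    """Keep only URLs the crawler is allowed to fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


rules = build_rules(ROBOTS_TXT)
print(filter_frontier(rules, "toy-crawler", ["https://example.com/shoes", "https://example.com/cart"]))
```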

The main takeaway: take the time to learn by doing.

7. Faceted Nav: Almost Everyone Is Doing It Wrong

Faceted navigation is our path to helping search engines understand which URLs we want them to crawl.

Sadly, there’s a misconception that faceted navs are only for ecommerce sites, leaving content-rich destination sites exposed to crawl risk.

Yet if every page gets faceted navigation, the crawl will take too long/exceed profit parameters.

Successfully leveraging faceted navigation means identifying which pages are valuable enough to “guarantee” the crawl.
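A minimal sketch of that prioritization, assuming facets are expressed as query parameters: a hypothetical allowlist of facet parameters decides which combinations are valuable enough to “guarantee” the crawl, and everything else is flagged. How a flagged URL is actually kept out (robots.txt, noindex, canonicals, or link handling) is a separate decision this code does not make.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical policy: single facets on these parameters are worth crawling.
CRAWLABLE_FACETS = {"color", "brand"}
MAX_FACETS = 1


def facet_policy(url: str) -> str:
    """Return 'crawl' for facet combinations worth crawling, else 'block'."""
    params = [key for key, _ in parse_qsl(urlsplit(url).query)]
    if not params:
        return "crawl"  # the unfaceted category page itself
    if len(params) <= MAX_FACETS and all(p in CRAWLABLE_FACETS for p in params):
        return "crawl"
    return "block"


for u in ["https://shop.example/shoes?color=red", "https://shop.example/shoes?color=red&size=9"]:
    print(u, facet_policy(u))
```

Capping the number of combined facets is what keeps the crawlable URL count from multiplying with every filter a user can stack.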

As a PPC marketer, I loved the shout-out for more collaboration between SEO and paid. Specifically:

- Sharing data on which pages convert via PPC/SEO so both sides know how to prioritize efforts.

- Query data that leads to valuable vs. near-miss users.

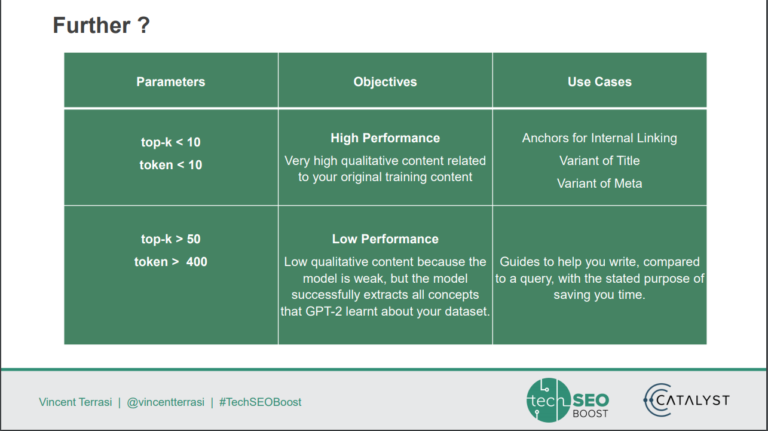

8. Generating Qualitative Content with GPT-2 in All Languages

Nothing drives home how much work we need to do to shatter bias like translation tools do. Vincent Terrasi shared the risks of being “complacent” in translation:

- Different languages have different idioms/small talk mechanics.

- Gender mechanics influence some languages while having no bearing on others.

- Rare verbs, uncommon tenses, and language-specific mechanics get lost in translation.

The result: scaling content generation models across non-English speaking populations fails.

Terrasi won’t let us give up!

Instead, he gave us a step-by-step path to begin creating a true translation model:

- Generate the compressed training data set via Byte Pair Encoding (BPE).

- Use SentencePiece to generate the BPE file.

- Fine-tune the model (see slide).

- Generate the article with the trained model.
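To show what the BPE step actually does, here is a toy merge learner in plain Python. It is a teaching sketch of the byte pair encoding idea (repeatedly merge the most frequent adjacent symbol pair), not SentencePiece and not Terrasi’s tool; the training words are hypothetical.

```python
from collections import Counter


def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn BPE merge rules from a list of words (duplicates count as frequency)."""
    # Each word starts as a tuple of characters plus an end-of-word marker.
    vocab = Counter(tuple(word) + ("</w>",) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        merges.append(best)
        # Rewrite the vocabulary with the winning pair fused into one symbol.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges


print(learn_bpe(["low", "low", "lower"], 2))
```

Because merges are learned from frequencies rather than a fixed word list, the same procedure works for any language’s training text, which is part of why BPE suits multilingual models.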

You can access Terrasi’s tool here.

Where I see PPC implications is in ad creative – we often force our messaging on prospects without honoring the unique mechanics of their markets. If we can begin to generate market-specific translations, we can improve our conversion rates and market sentiment.

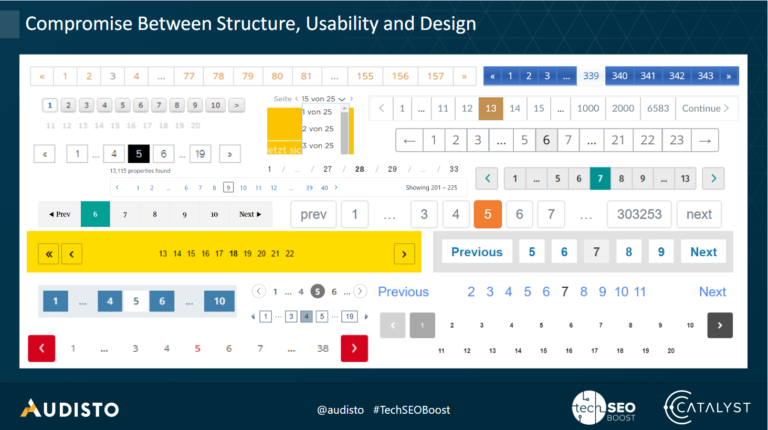

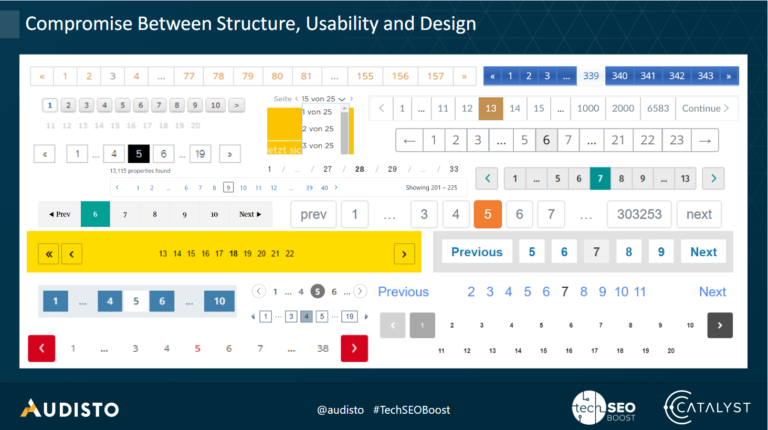

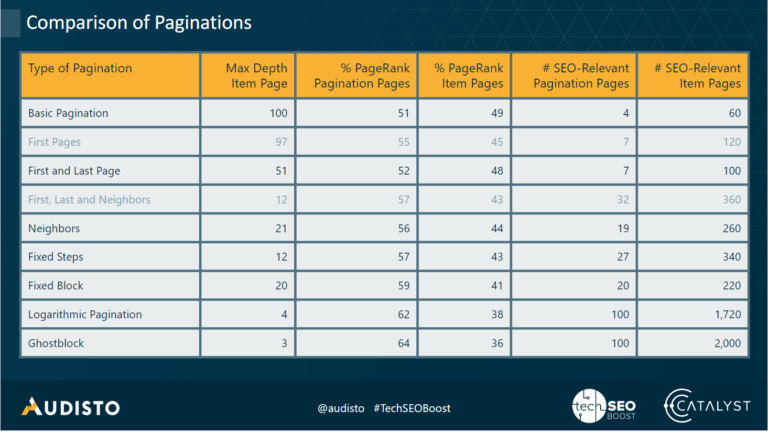

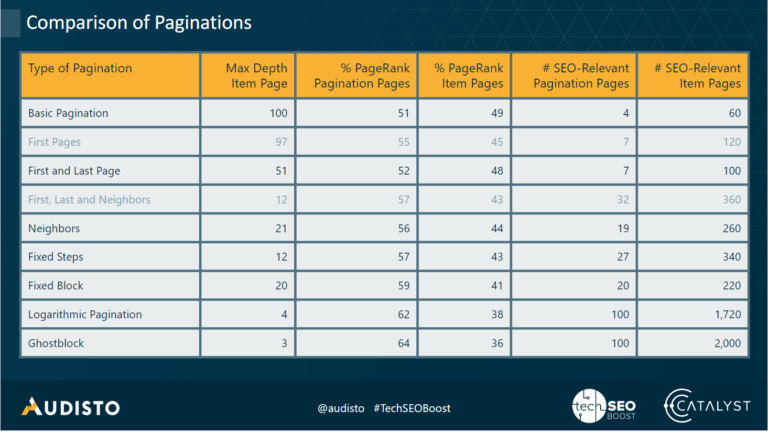

9. Advanced Data-Driven Tech SEO – Pagination

Conversion rate optimization (CRO) is a crucial part of all digital marketing disciplines.

Yet we often overlook the simple influencers on our path to profit.

One such opportunity is pagination (how we lay out the number of pages and products per page).

The more pages clients have to go through to reach their ideal product/content, the greater the risk for mental fatigue.
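The fatigue point is easy to quantify. This small sketch assumes a hypothetical scheme where each listing page links to the next `links_ahead` pages, then computes how many pages a category needs and how many clicks it takes to reach the last one from page 1. It does not model Ghost Block or the other formats compared in the talk.

```python
import math


def pages_needed(total_items: int, per_page: int) -> int:
    """Number of listing pages required to show every item."""
    return math.ceil(total_items / per_page)


def deepest_click_depth(total_items: int, per_page: int, links_ahead: int = 1) -> int:
    """Clicks from page 1 to the last page when each page links to the next `links_ahead` pages."""
    last_page = pages_needed(total_items, per_page)
    return math.ceil((last_page - 1) / links_ahead)


print(pages_needed(1000, 20), deepest_click_depth(1000, 20), deepest_click_depth(1000, 20, links_ahead=5))
```

With 1,000 products at 20 per page, “next”-only links leave the last page 49 clicks deep; linking five pages ahead cuts that to 10, for users and crawlers alike.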

While there are pros and cons to all forms of pagination, Ghost Block far and away did the best job of honoring user and crawl behaviors.

Here are the outcomes of all pagination formats:

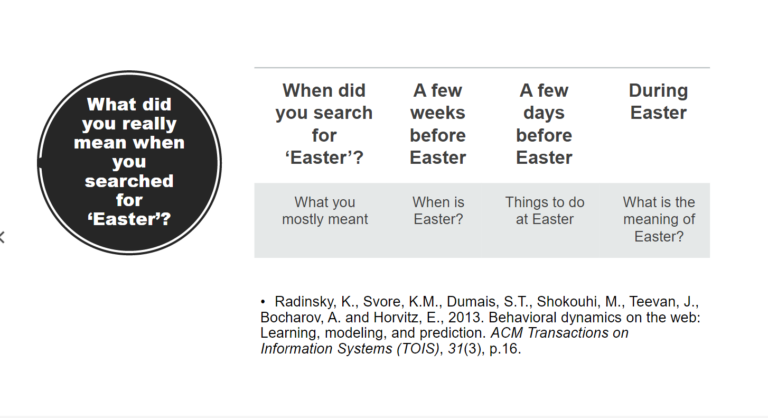

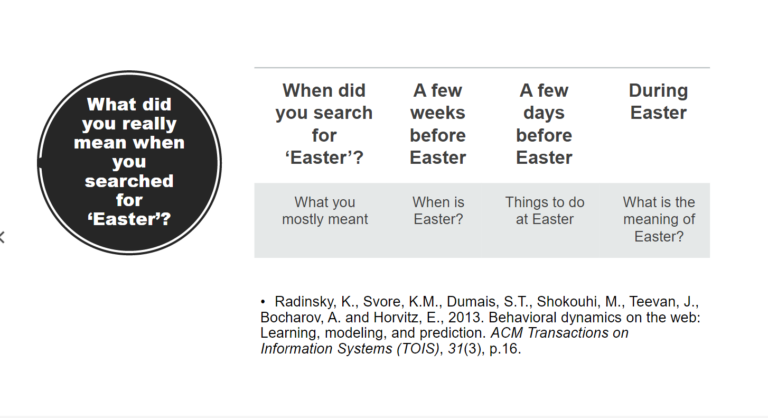

10. The User Is the Query

Dawn Anderson’s perspective on query analysis and audience intent is empowering for SEO and PPC professionals alike.

Way ahead of the curve on BERT, she empowers us to think about the choices we present our prospects and how much we are playing into their filters of interest.

In particular, she challenged us to think about:

- The impact of multi-meaning words like “like” and how context of timing, additional words, and people speaking them influences their meaning.

- How often users need to “teach” search engines what they mean, most often by clicking on wrong answers.

- When head terms (“dress,” “shoes,” “computer”) can have highly transactional intent, versus being high up in the funnel.

For example, “Liverpool Manchester” is most often a travel query, but when football is on, it turns into a sports query.

Anderson encourages us to focus on the future – specifically:

- Shifting away from text-heavy to visual enablement. We need to come from a place of curation (for example, hashtags) as opposed to verbatim keyword matching.

- Shifting away from query targeting and opting more into content suggestions based on persona predictions.

- Shifting away from answers and weaving ourselves into user journeys (nurturing users to see us as a habitual partner rather than a one-off engagement).

This session had the most cross-over implications for PPC – particularly because we have been shifting toward audience-oriented PPC campaigns for the past few years.

11. Ranking Factors Going Causal

I have so much love in my heart for a fellow digital marketer who sees board games as a path to explain and teach SEO/PPC.

This session gave a candid and empowering view on why we need to think critically about SEO studies.

Micha Fisher-Kirshner reminds us to be:

- Consistent with our data collection, and honest with ourselves about sample size/statistical significance.

- Mindful of positive and negative interactions and what impact they can have on our data sets.

- Organized in our categorizations and quality checks.
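On the sample-size point, one common rule of thumb (not something presented in the talk) is the normal-approximation formula for estimating a proportion: n = z^2 * p * (1 - p) / e^2, rounded up, where e is the margin of error. Using p = 0.5 gives the most conservative answer when you have no prior estimate.

```python
import math


def sample_size_for_proportion(margin_of_error: float, z: float = 1.96, p: float = 0.5) -> int:
    """Minimum n to estimate a proportion within +/- margin_of_error.

    z = 1.96 corresponds to roughly 95% confidence; p = 0.5 is the worst case.
    """
    return math.ceil(z ** 2 * p * (1 - p) / margin_of_error ** 2)


print(sample_size_for_proportion(0.05))
```

For a ±5% margin at 95% confidence, that works out to 385 observations, which is a useful sanity check before trusting a ranking study built on a few dozen SERPs.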

My favorite takeaway (based on Mysterium) is to be mindful at the onset of any study and be sure all the necessary checks are in place. Much like the game, it’s possible to set oneself up for a “no win” condition simply because we didn’t set ourselves up correctly.

I also have to give Fisher-Kirshner a shout out for coming at this from a place of positivity, and not “outing” folks who mess up these checks. Instead, he simply inspired all of us to chase better causation and correlation deduction.

12. Advanced Analytics for SEO

Analytics is the beating heart of our decisions – and getting to learn from this panel was a treat.

Our cast of players included:

- Dan Shure – Evolving SEO (host)

- Aja Frost – HubSpot

- Colleen Harris – CDK Global LLC

- Jim Gianoglio – Bounteous

- Alexis Sanders – Merkle

While each panelist had their own unique perspective, the overarching suggestion is sharing data between departments and working together to combat anomalies.

Gianoglio reminds us to be mindful of filters that might distort data and never allow a client to force us to a single guiding metric.

Frost shared her skepticism that analytics will be our single source of truth in the emerging GDPR and CCPA world, and encouraged us to explore data blending if we aren’t confident in SQL.

Harris encouraged us to be pragmatic and realistic about data sources: if the data seems off, we should explore it! Analytics is a means to uncover data distortion.

Sanders encourages us to pull revenue numbers and marry analytics with tools like Screaming Frog and SEMrush to create true attribution for SEO’s impact on profit.
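Marrying revenue data with crawl data mostly comes down to a clean join key. This minimal sketch assumes two hypothetical exports that share a URL column (revenue per landing page from analytics, and crawl rows from a tool like Screaming Frog): normalize the URLs, sum revenue per URL, and attach it to each crawl row. This normalization drops query strings, which may or may not suit your site.

```python
from urllib.parse import urlsplit


def normalize_url(url: str) -> str:
    """Lowercase the host and drop trailing slashes so both exports share one join key."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return f"{parts.netloc.lower()}{path}"


def blend(revenue_rows: list[dict], crawl_rows: list[dict]) -> list[dict]:
    """Attach summed revenue to every crawled URL (0.0 when no revenue was recorded)."""
    revenue: dict[str, float] = {}
    for row in revenue_rows:
        key = normalize_url(row["url"])
        revenue[key] = revenue.get(key, 0.0) + row["revenue"]
    return [{**row, "revenue": revenue.get(normalize_url(row["url"]), 0.0)} for row in crawl_rows]
```

Starting from the crawl rows means pages with zero revenue still show up, which is often where the interesting questions are.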

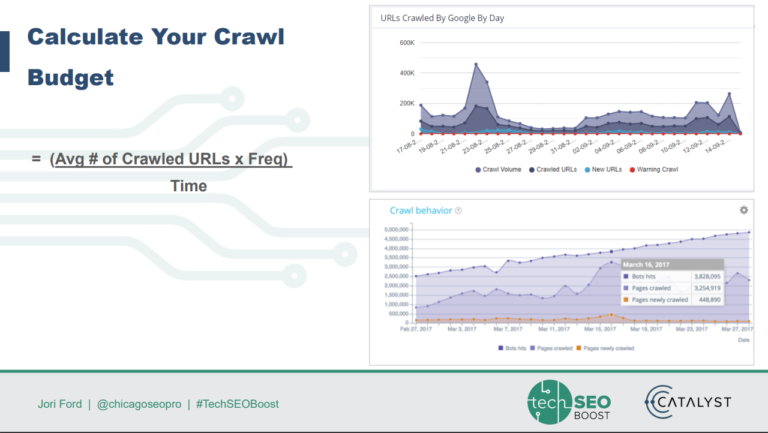

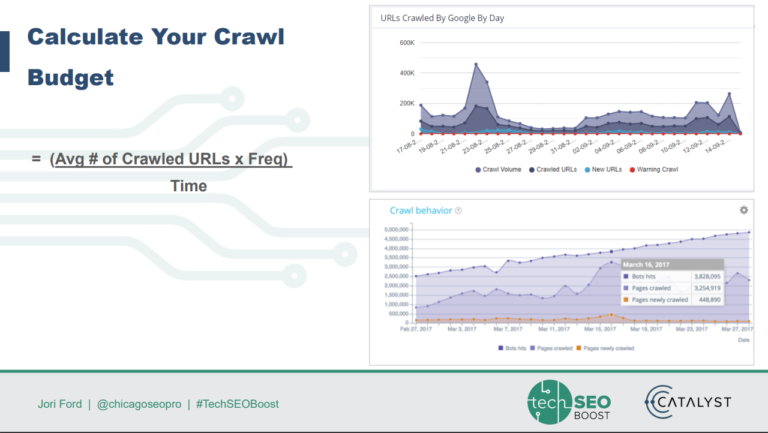

13. Crawl Budget Conqueror

Jori Ford outlined a really pragmatic approach to crawl budgets: honor your money pages and account accordingly!

Her four-step approach is:

- Determine the volume of pages, and only use the sitemap to correlate if it’s an optimized sitemap.

- Understand which pages are being crawled naturally via custom tracking and log file analyzers (Botify, Deepcrawl, OnCrawl, etc.).

- Assess the frequency of pages crawled and how many pages are getting crawled frequently/infrequently.

- Segment by percentage: page type, crawl allocation, active vs. inactive, and not crawled.
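Here is a minimal sketch of steps two through four, assuming access logs in the common Apache/Nginx “combined” format and a list of known URLs (for example, from your sitemap). “Active” here simply means crawled at least `active_threshold` times in the log window, a hypothetical stand-in; Ford’s own definitions and the log analyzers she named may define segments differently. Matching on the user-agent string alone can be spoofed; verifying real Googlebot traffic needs a reverse DNS check.

```python
import re
from collections import Counter

# Assumes the "combined" log format; adjust the pattern for your server.
LOG_RE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def googlebot_hits(lines) -> Counter:
    """Count requests per path whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_RE.search(line)
        if match and "Googlebot" in match.group("ua"):
            hits[match.group("path")] += 1
    return hits


def segment(known_pages, hits, active_threshold: int = 2) -> dict:
    """Bucket known pages by how often they were crawled in the log window."""
    segments = {"active": [], "inactive": [], "not_crawled": []}
    for page in known_pages:
        count = hits.get(page, 0)
        if count == 0:
            segments["not_crawled"].append(page)
        elif count >= active_threshold:
            segments["active"].append(page)
        else:
            segments["inactive"].append(page)
    return segments


def segment_shares(segments: dict) -> dict:
    """Express each segment as a percentage of all known pages."""
    total = sum(len(pages) for pages in segments.values()) or 1
    return {name: round(100 * len(pages) / total, 1) for name, pages in segments.items()}
```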

Understandably, we may still hit an insurmountable crawl budget need. Her advice: prune low-profit pages and deindex duplicates.

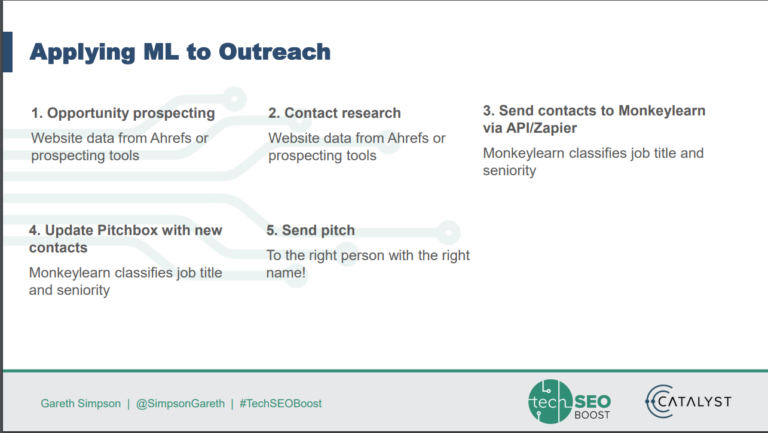

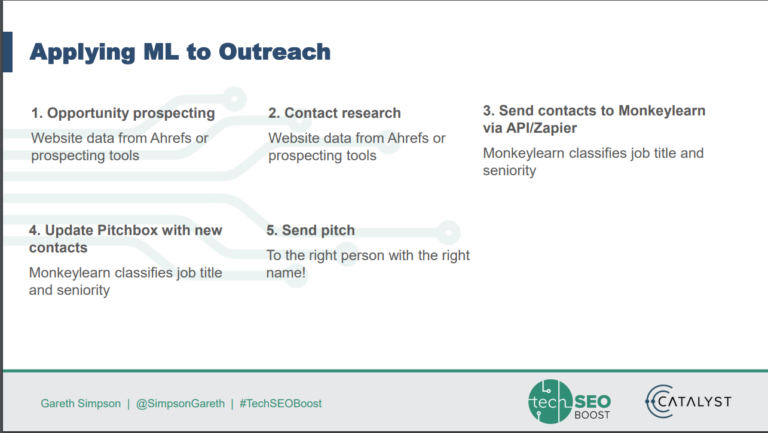

14. Leveraging Machines for Awesome Outreach

Gareth Simpson invites us to explore tasks we can delegate to AI and machine learning. However, before we can, we need to have practical workflows to build machine learning into our day.

Here is the path to machine learning:

- Gathering data from sources.

- Cleaning data to achieve homogeneity.

- Building the model/selecting the right ML algorithm.

- Gaining insights from the model’s results.

- Visualizing data: transforming results into visual graphs.
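The cleaning step is usually where most of the effort goes. This minimal sketch shows what “homogeneity” can mean for outreach prospects, assuming a hypothetical raw list of domains pulled from several sources: normalize scheme, case, `www.`, and paths, then deduplicate while preserving order. It is illustrative only, not Simpson’s workflow.

```python
def normalize_domain(raw: str) -> str:
    """Reduce a messy URL or domain string to a bare lowercase hostname."""
    domain = raw.strip().lower()
    for prefix in ("https://", "http://"):
        if domain.startswith(prefix):
            domain = domain[len(prefix):]
    domain = domain.split("/", 1)[0]  # drop any path
    if domain.startswith("www."):
        domain = domain[4:]
    return domain


def clean_prospects(raw_domains: list[str]) -> list[str]:
    """Normalize and deduplicate prospects, keeping first-seen order and skipping blanks."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for raw in raw_domains:
        domain = normalize_domain(raw)
        if domain and domain not in seen:
            seen.add(domain)
            cleaned.append(domain)
    return cleaned
```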

Testing machine learning in prospecting might seem crazy (the human element of the relationship is crucial), but Simpson helps us uncover delegatable tasks.

Image Credits

All screenshots taken by author from TechSEOBoost slide decks, December 2019
