02 Sep Everything You Need to Know About the X-Robots-Tag via @natalieannhoben

There are a few types of directives that tell search engine bots which pages and other content they are allowed to crawl and index. The most commonly referenced are the robots.txt file and the meta robots tag.

The robots.txt file tells search engines which parts of your website they may or may not crawl, whether that is a page, a subfolder, etc.

This ultimately helps to produce a more optimized crawl by telling Google which less important sections of the site you do not want prioritized for crawling.

Keep in mind, though, that search engine bots are not required to respect this file.

Another directive that is commonly used is the meta robots tag. This allows for indexation control at the page level.

A meta robots tag can include the following values:

- Index: Allows search engines to add the page to their index.

- Noindex: Disallows search engines from adding a page to their index and disallows it from appearing in search results for that specific search engine.

- Follow: Instructs search engines to follow links on a page, so that the crawl can find other pages.

- Nofollow: Instructs search engines to not follow links on a page.

- None: This is a shortcut for noindex, nofollow.

- All: This is a shortcut for index, follow.

- Noimageindex: Disallows search engines from indexing images on a page (images can still be indexed, though, if they are linked to from another site).

- Noarchive: Tells search engines to not show a cached version of a page.

- Nocache: This is the same as the noarchive tag, but specific to Bingbot/MSNbot.

- Nosnippet: Instructs search engines to not display text or video snippets.

- Notranslate: Instructs search engines to not show translations of a page in SERPs.

- Unavailable_after: Tells search engines a specific day and time after which they should no longer display the page in their results.

- Noyaca: Instructs Yandex crawler bots to not use page descriptions in results.
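
To make the two shortcut values concrete, here is a minimal Python sketch of how a comma-separated robots value could be normalized. The helper name `expand_robots_directives` and the `SHORTCUTS` table are hypothetical and exist only for illustration; real crawlers may resolve conflicting or duplicate directives differently.

```python
# Hypothetical helper for illustration; not part of any search engine's API.
SHORTCUTS = {
    "none": ["noindex", "nofollow"],  # shortcut described in the list above
    "all": ["index", "follow"],       # shortcut described in the list above
}


def expand_robots_directives(content):
    """Split a comma-separated robots value and expand the shortcut values."""
    expanded = []
    for raw in content.split(","):
        directive = raw.strip().lower()
        if not directive:
            continue  # ignore empty items such as a trailing comma
        for item in SHORTCUTS.get(directive, [directive]):
            if item not in expanded:  # keep the first occurrence only
                expanded.append(item)
    return expanded


print(expand_robots_directives("None"))
print(expand_robots_directives("all, noarchive"))
```

The first call prints `['noindex', 'nofollow']` and the second prints `['index', 'follow', 'noarchive']`.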

However, there is another tag out there that allows for the issuing of noindex, nofollow directives.

The X-Robots-Tag differs from the robots.txt file and meta robots tag, though, in that the X-Robots-Tag is a part of the HTTP header that controls indexing of a page on the whole, in addition to specific elements on a page.

According to Google:

“Any directive that can be used in a robots meta tag can also be specified as an X-Robots-Tag.”

While you can set robots directives with both the meta robots tag (in a page’s HTML) and the X-Robots-Tag (in the headers of an HTTP response), there are certain situations where you would want to use the X-Robots-Tag.

For example, if you wanted to block a specific image or video, you could use the HTTP response method.

Essentially, the power of the X-Robots-Tag is that it is much more flexible than the meta robots tag.

The X-Robots-Tag also allows regular expressions to be used, crawl directives to be executed on non-HTML files, and parameters to be applied on a larger, global level.

To further explain the difference between all of these directives, it is helpful to categorize them into the type of directives that they fall under. These are either crawler directives or indexer directives.

| Crawler Directives | Indexer Directives |
| --- | --- |
| Robots.txt – uses the user agent, allow, disallow and sitemap directives to specify where on a site search engine bots are and are not allowed to crawl. | Meta Robots tag – allows you to specify and prevent search engines from showing particular pages on a site in search results. |
| | Nofollow – allows you to specify links that should not pass on authority or PageRank. |
| | X-Robots-tag – allows you to control how specified file types are indexed. |

Real-World Examples and Uses of the X-Robots-Tag

To block specific file types, an ideal approach would be to add the X-Robots-Tag to an Apache configuration or a .htaccess file.

The X-Robots-Tag can be added to a site’s HTTP responses in an Apache server configuration via the .htaccess file.

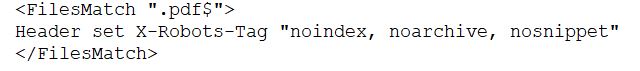

For example, let’s say we wanted search engines not to index .pdf filetypes. This configuration on Apache servers would look something like the below:

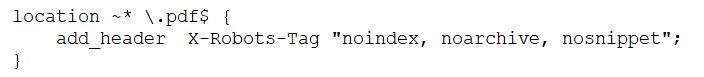

In Nginx, it would look like the below:

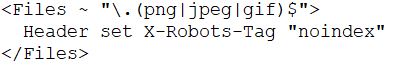

In a different scenario, let’s say we want to use the X-Robots-Tag to block the indexing of image files, such as .jpg, .gif, .png, etc. The approach is the same as for .pdf files, with the file pattern matching image extensions instead.
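
The file-type matching behind both scenarios can be sketched in Python. This is an illustration of the logic only, not Apache or Nginx syntax: the `RULES` table, the `x_robots_header_for` helper, and the exact patterns are assumptions made for this example, and the `noindex` value is chosen to mirror the "do not index" goal described above.

```python
import re

# Hypothetical rules: each pattern is matched against the requested path.
# The patterns and header values are assumptions for illustration.
RULES = [
    (re.compile(r"\.pdf$", re.IGNORECASE), "noindex"),
    (re.compile(r"\.(png|jpe?g|gif)$", re.IGNORECASE), "noindex"),
]


def x_robots_header_for(path):
    """Return the X-Robots-Tag value for a path, or None if no rule matches."""
    for pattern, value in RULES:
        if pattern.search(path):
            return value
    return None


for path in ("/docs/report.pdf", "/img/logo.PNG", "/about.html"):
    print(path, "->", x_robots_header_for(path))
```

A server applying rules like these would attach the header to matching responses and leave every other response untouched, which is why one pattern can cover a whole class of non-HTML files.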

Understanding the mix of these directives and their impact on each other is crucial.

Say that both the X-Robots-Tag and a Meta Robots tag are located when crawler bots discover a URL.

If that URL is blocked by robots.txt, then the crawler never fetches it, so its indexing and serving directives cannot be discovered and will not be followed.

If directives are to be followed, then the URLs carrying them cannot be disallowed from crawling.
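
This interaction can be sketched with Python’s standard `urllib.robotparser` module. The robots.txt rules, the `example.com` URLs, and the `header_is_visible` helper are made up for illustration; the point is only that a compliant crawler checks robots.txt before requesting a URL, so it never receives the response headers of a disallowed URL.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def header_is_visible(url, user_agent="*"):
    """A compliant crawler only sees response headers for URLs it may fetch."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/public/report.pdf",
    "https://example.com/private/report.pdf",
):
    status = "header can be read" if header_is_visible(url) else "header never seen"
    print(url, "->", status)
```

In this sketch, any X-Robots-Tag set on files under `/private/` would go unread, because the disallow rule stops the request before the header is ever returned.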

Check for an X-Robots-Tag

There are a few different methods that can be used to check for an X-Robots-Tag on the site.

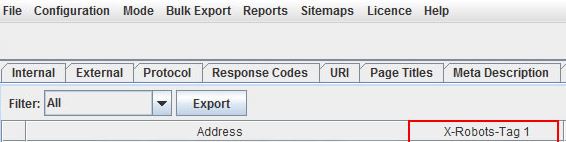

One method is through Screaming Frog.

After running a site through Screaming Frog, you can navigate to the “Directives” tab and look for the “X-Robots-Tag” column, and then see which sections of the site are using the tag, along with which specific directives.

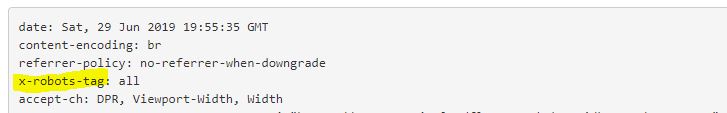

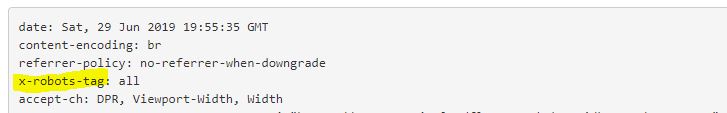

There are also a few different plugins out there, such as the Web Developer plugin, that allow you to determine whether an X-Robots-Tag is being used.

By clicking on the plugin in your browser and then navigating to “View Response Headers”, you can see the various HTTP headers being used.
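
If you prefer a script to a browser plugin, the same check can be sketched with Python’s standard library. The helper names `x_robots_values` and `fetch_x_robots` are hypothetical, and this is a minimal sketch: it assumes the server answers HEAD requests and does not handle redirects or errors specially.

```python
from urllib.request import Request, urlopen


def x_robots_values(headers):
    """Collect every X-Robots-Tag value from (name, value) header pairs."""
    return [
        value.strip()
        for name, value in headers
        if name.lower() == "x-robots-tag"  # header names are case-insensitive
    ]


def fetch_x_robots(url, timeout=10.0):
    """Send a HEAD request and return the X-Robots-Tag values, if any."""
    request = Request(url, method="HEAD")
    with urlopen(request, timeout=timeout) as response:
        return x_robots_values(response.headers.items())


# Offline demonstration with sample headers (no network access needed).
sample = [("Content-Type", "application/pdf"), ("X-Robots-Tag", "noindex, nofollow")]
print(x_robots_values(sample))
```

The sample call prints `['noindex, nofollow']`. To check a live URL, call `fetch_x_robots` with a page or file on the site being audited; an empty list means no X-Robots-Tag header was returned.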

To Sum Up

There are multiple ways to instruct search engine bots to not crawl certain sections or certain resources on a page.

Having an understanding of each and how they affect one another is crucial in order to avoid any major SEO directive-related pitfalls.

Image Credits

Featured image & screenshots taken by author, June 2019
